Crawl depth is an important SEO factor that influences how search engines crawl and index your website. Keeping your key pages at a shallow crawl depth can improve your site's visibility.

Screaming Frog is an SEO tool that helps website owners and digital marketers diagnose and manage crawl depth accurately. This article focuses on how to audit crawl depth with its help.

We will also outline how to do it effectively, so that your site is easier to index and better placed to rank in search results. Even if you’re going for SEO packages, you can use this guide to build a strong SEO foundation and improve your website’s overall user experience. Let’s get started!

What is Crawl Depth?

Crawl depth means the number of link clicks a search engine crawler needs to get from your home page to a particular page. Search engines use crawlers to discover and fetch web pages. A crawler follows links from page to page across your site, trying to reach every page it can.
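To make the definition concrete, here is a minimal sketch that computes crawl depth with a breadth-first search over a toy link map. The `site` dictionary and its URLs are made up for illustration, and this is not how any particular search engine or Screaming Frog works internally.

```python
from collections import deque


def crawl_depths(links, home):
    """Return {url: depth}, where depth is the fewest clicks from `home`."""
    depths = {home: 0}          # the home page sits at depth 0
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:        # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each page maps to the pages it links to.
site = {
    "/": ["/products", "/about"],
    "/products": ["/products/shoes"],
    "/products/shoes": ["/products/shoes/red-sneaker"],
    "/about": [],
}

depths = crawl_depths(site, "/")
for url, depth in depths.items():
    print(depth, url)
```

In this toy site, the sneaker page is three clicks from the home page, which is deeper than you would normally want for an important product page.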

Normally, you want your most important pages, such as product or service pages, to have a crawl depth of 1 or 2, so that they can be reached within a click or two of the homepage. Pages with a greater crawl depth may be crawled less often by search engines, or not at all, and may therefore get little traffic and visibility.

Whether you go for digital marketing consultancy services or do SEO yourself, understanding and managing crawl depth is necessary for maximizing your website’s potential.

Why is Crawl Depth Important?

Crawl depth is important because it affects how search engines treat your pages and where they rank them. Pages with a shallow crawl depth are generally treated as more important than deeper ones and are more likely to be crawled and indexed by search engines.

This means pages close to the home page tend to rank better in the search engine results pages and consequently attract more organic traffic. If essential pages sit far down in the site structure, crawlers will rarely reach them, which leads to poor indexing and visibility.

Also, a shallow site structure lets users reach the information they want quickly, without clicking through many intermediate pages. This improves usability: visitors are likely to spend more time on your site if they can easily find the content they are looking for.

Not only do these practices improve rankings in search engines, they also increase visitor engagement and satisfaction. Managing crawl depth is therefore one of the most important SEO tasks for any website owner, and user experience should not be ignored along the way.

Steps to Audit Crawl Depth Using Screaming Frog

Configure Initial Settings

To get accurate data for the crawl depth analysis, Screaming Frog has to be set up correctly. Begin by launching Screaming Frog, then click ‘Configuration’ in the toolbar.

You can also define custom extraction patterns and specify which file extensions should or should not be included in the crawl. This step makes sure that only the necessary information is gathered during the crawl.

Start the Crawl

To start a crawl, type the URL of the website you want to audit into the text box at the top of the main Screaming Frog window and click “Start.” Screaming Frog will then crawl the website.

It follows every link it discovers and collects data about each page it comes across. If the site is large or has a complex structure, this can take quite some time. You can follow the progress in the progress bar at the bottom of the window.

Analyze Crawl Depth Data

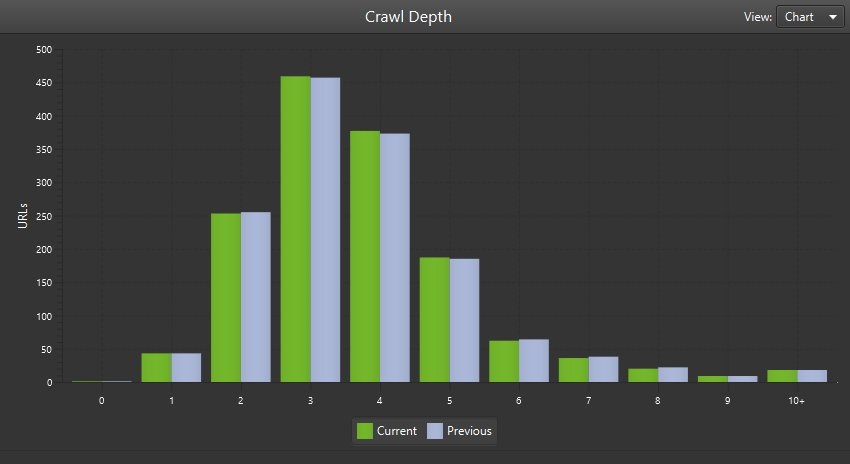

Once the crawl is complete, go to the ‘Site Structure’ tab in Screaming Frog and click on ‘Crawl Depth.’ It shows how many clicks are required to reach each page from the home page, which is counted as depth 0.

Generally, the nearer a page is to the home page, the more link equity it receives and the more frequently it is crawled by search engines. This tab helps you understand the structure of the site by showing the number of URLs at each depth level and the percentage of all URLs that they represent.
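The same kind of breakdown, a count and a percentage of URLs per depth level, can be sketched in a few lines. The `depth_by_url` values below are invented sample data, not the output of a real crawl.

```python
from collections import Counter


def depth_distribution(depth_by_url):
    """Return {depth: (url_count, percent_of_all_urls)} sorted by depth."""
    counts = Counter(depth_by_url.values())
    total = len(depth_by_url)
    return {
        depth: (counts[depth], round(100 * counts[depth] / total, 1))
        for depth in sorted(counts)
    }


# Hypothetical crawl result: URL -> crawl depth.
depth_by_url = {
    "/": 0,
    "/products": 1,
    "/about": 1,
    "/products/shoes": 2,
}

distribution = depth_distribution(depth_by_url)
for depth, (count, percent) in distribution.items():
    print(f"depth {depth}: {count} URLs ({percent}%)")
```

A distribution that is heavily weighted toward high depth levels is usually a sign that the site structure deserves a closer look.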

Identify Deeply Nested Pages

A deeply nested page, meaning one with a high crawl depth, may not be crawled and indexed as effectively as a page closer to the website’s home page. In the Crawl Depth subtab, sort the data to single out URLs that are several links away from the root.

Focus especially on high-priority pages, which should usually be closer to the home page and therefore more visible for indexing. This step shows you exactly which areas of the site architecture may require attention and improvement.
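If you export the crawl data to a CSV file, the same check can be scripted. The sketch below assumes the export has a URL column named `Address` and a depth column named `Crawl Depth`; check these names against your own export and adjust them if they differ. The sample rows and the threshold of 3 are illustrative only.

```python
import csv
import io


def deep_pages(csv_file, max_depth=3, url_col="Address", depth_col="Crawl Depth"):
    """Return (url, depth) pairs deeper than `max_depth`, deepest first.

    The column names are assumptions about the export format.
    """
    flagged = []
    for row in csv.DictReader(csv_file):
        raw = (row.get(depth_col) or "").strip()
        if not raw.isdigit():       # skip rows without a numeric depth
            continue
        depth = int(raw)
        if depth > max_depth:
            flagged.append((row[url_col], depth))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Made-up sample standing in for an exported file.
sample = io.StringIO(
    "Address,Crawl Depth\n"
    "https://example.com/,0\n"
    "https://example.com/products,1\n"
    "https://example.com/products/shoes/red/size-9,4\n"
    "https://example.com/blog/2019/05/old-post,5\n"
)

flagged = deep_pages(sample)
for url, depth in flagged:
    print(depth, url)
```

With a real export you would pass an open file instead of `sample`, for example `open("export.csv", newline="", encoding="utf-8")`, where the file name is a placeholder.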

Optimize Internal Linking

Reducing crawl depth usually means rethinking your internal linking strategy. Use what you learn from the Screaming Frog SEO Spider to decide which pages need more internal links.

For instance, if a specific product page sits fairly deep in the site structure, consider linking to it from a top-level page of the website or from the category pages.
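The effect of such a link is easy to see on a small example. The sketch below uses a made-up link map and measures the depth of a product page before and after a category page links to it directly. It is an illustration of the idea, not a model of a real site.

```python
from collections import deque


def depth_of(links, home, target):
    """Fewest clicks from `home` to `target`, or None if unreachable."""
    seen = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        if page == target:
            return seen[page]
        for nxt in links.get(page, []):
            if nxt not in seen:
                seen[nxt] = seen[page] + 1
                queue.append(nxt)
    return None


# Hypothetical site before the change.
site = {
    "/": ["/products"],
    "/products": ["/products/shoes"],
    "/products/shoes": ["/products/shoes/red-sneaker"],
}
product = "/products/shoes/red-sneaker"
before = depth_of(site, "/", product)

# Copy the link map and add a direct link from the category page.
improved = {page: list(targets) for page, targets in site.items()}
improved["/products"].append(product)
after = depth_of(improved, "/", product)

print(f"depth before: {before}, depth after: {after}")
```

A single well-placed link moves the page one level closer to the home page here. Linking from the home page itself would bring it to depth 1.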

To summarize, if you want to audit a website to understand how crawlable it is, use Screaming Frog to map the site. Analyzing crawl depth and identifying deeply nested pages can help you optimize internal linking for better visibility and indexing by search engines.
